tldr: The 2016 US election and the 2016 Brexit vote were no exceptions. Instead, they have convinced everybody how effective a manipulation weapon Facebook is. So expect more manipulation, in elections and otherwise. P.S.: No, it can’t be fixed.

This post was prompted by two excellent, but scary, articles on how the same people used the same technology to sway popular votes in the UK and the US by means of Facebook.

- Time: “Inside Russia’s Social Media War on America”

- Guardian: “The great British Brexit robbery: how our democracy was hijacked”

Read them. This post will still be here when you are done :-)

I came away asking: how could Facebook be “fixed” so this kind of attack on democratic countries’ political processes would be impossible in the future?

After some pondering, here is my reluctant conclusion: it can’t be fixed. Facebook cannot be “fixed” in a way that prevents future hijackings of democracy. Instead, the more successful Facebook becomes, the more likely hijackings like that will happen again and again, more effectively every time. #Russiagate was only the beginning. And here’s why:

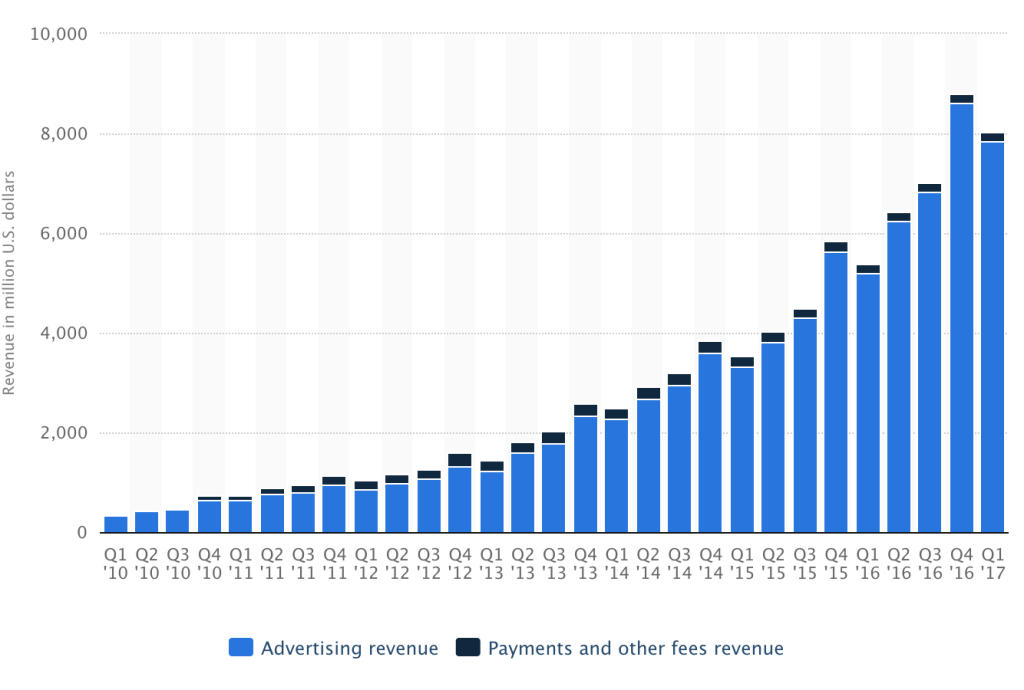

Let’s look at Facebook’s business. Revenue that does not come from ads is negligible, and not even growing:

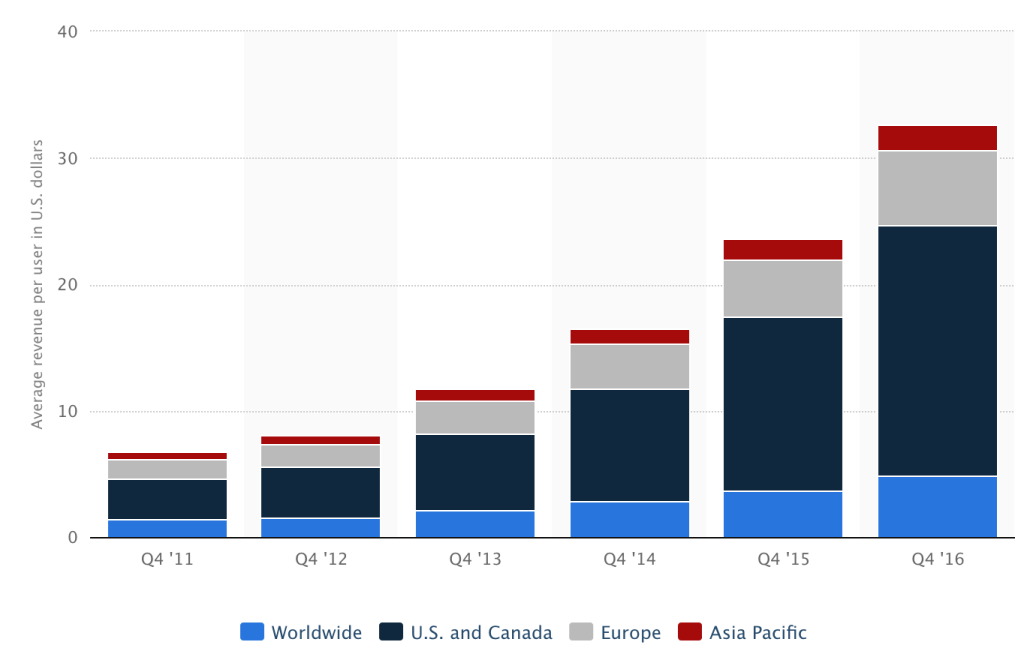

Revenue per user keeps growing strongly, to between $2 and $20 per user per quarter, depending on where in the world the user is located:

Because of this, Facebook is optimized for one thing, and one thing only: taking the message of those people who have one and are willing to spend money, and depositing it into users’ brains so that users will act differently than they otherwise would have. That’s what their business is, and that’s what the business is optimizing for; the numbers do not lie.

But people hate ads and if there are too many, users will revolt. So what can Facebook do to grow revenue per user?

- Improve the effectiveness of ads, i.e. get the Coke ad not just in front of people, but in front of only those people who are most likely to be influenced by it and then actually go out and buy a Coke they otherwise wouldn’t have.

- Create longer and more intense “user engagement” on the site, so it can show you more ads than you realize, because as a percentage of the content you see, ads remain a low number. That, in turn, largely works by getting people to share lots of engaging content with their friends.

That latter part, in our context, is particularly insidious, because nowhere does it say that those friends have to be real friends. They could be sock puppets or bots.

See how tailor-made this machinery is to swing an election?

- The machinery to identify the most likely switcher from Pepsi to Coke is there and getting more optimized all the time. Let’s use it for a political candidate.

- The machinery to actually make the user go out and buy a Coke is there and getting more optimized all the time. Let’s use it to get them to vote. Or not vote. Depending on your goal.

- The machinery to inject arbitrary content, free of all oversight, into the public discussion is there and getting better all the time: just hire an army of “Facebook users” who “share” “their personal opinions”. And some of this can be automated with bots.

The kicker is that much propaganda masks itself as “free speech” by “normal Facebook users” and so cannot (and should not) be regulated.

What can we expect going forward? We can expect that Facebook will keep growing. Which means that their machinery of getting somebody’s messages into your brain, to cause changes in your actions, by definition, will have gotten better. Which means, of course: the next election will be manipulated, too. Just more so.

We need something other than Facebook that has the same advantages but is optimized for connecting with friends, not for making the propaganda of people with an agenda and some money more effective. It’s bad enough already. How will it be 10 years from now?